As we reported yesterday, OpenAI has launched a new, more powerful model called GPT-4o. The video we have for you is a crazy AI demo on the iPad. In students' hands, tools like this could change teaching methods forever.

If you didn't watch yesterday's OpenAI event, I highly recommend you do so. The headline news is that the latest GPT-4 model works seamlessly with any combination of text, audio, and video.

This includes the ability to "show" the GPT-4o app a screen recording you capture from another app, and it's this capability that the company showed off with a crazy demo of an AI tutor on the iPad.

GPT-4o: OpenAI says the "o" stands for "omni".

We had our doubts about the name, too. After all, is that last character an "o" or a zero?

GPT-4o ("o" for "omni") is a step toward more natural human-computer interaction: it accepts as input any combination of text, audio, and images and outputs any combination of text, audio, and images.

It can respond to voice input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation […] GPT-4o is especially better at vision and audio understanding than existing models.

The audio aspect is especially important. Previously, ChatGPT could accept voice input, but it converted the speech to text before working with it. GPT-4o, by contrast, understands audio directly, so the conversion stage is skipped entirely.

As we mentioned yesterday, free users also have access to many features that were previously limited to paying subscribers.

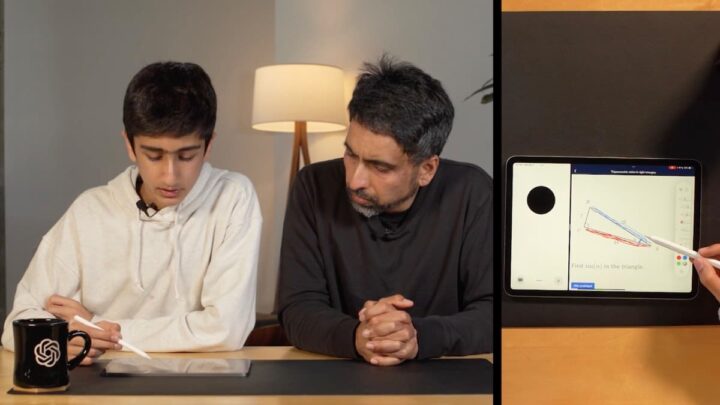

iPad AI tutor demo

One capability OpenAI demonstrated is GPT-4o's ability to see what you are doing on the iPad screen (in split-screen mode). In the example, the AI acts as a tutor, helping a student work through a math problem.

You can hear that GPT-4o initially understood the problem and wanted to solve it right away. But the new model can be interrupted, and in this case it was asked to help the student solve the problem on their own.

Another capability on display is the model's claimed ability to detect emotion in speech and to express emotion itself. This may have been a bit overdone in the beta, and the AI arguably came across as slightly condescending. But all of this can be tuned.

Effectively, every student in the world could have a private tutor with this kind of capability.

How much of this will Apple adopt?

We know that AI is a major focus of iOS 18, and that Apple is finalizing a deal to bring OpenAI features to its devices. Although this was described at the time as being for ChatGPT, it now seems very likely that the actual deal is for access to GPT-4o.

But we also know that Apple has been working on its own AI models, with its own data centers running its own chips. For example, Apple has been developing its own approach to letting Siri understand app screens.

So we don't know exactly which GPT-4o capabilities the company will bring to its devices, but this feature seems such a perfect fit for Apple that I have to believe it will be included. This is technology genuinely being used to empower people.
